If you are running in a corporate environment or are migrating a Kubernetes cluster from an overlay network to Amazon EKS, you most likely have a question:

“What do you mean the Pods and EC2 Instances are given IPs out of the same CIDR range?”

First, this is perfectly normal. Amazon EKS builds on the features, security, and simplicity of Amazon VPC. This is an advantage for customers because you can use all of the existing security tooling that runs within your network and simplify routing between pods and the rest of your network.

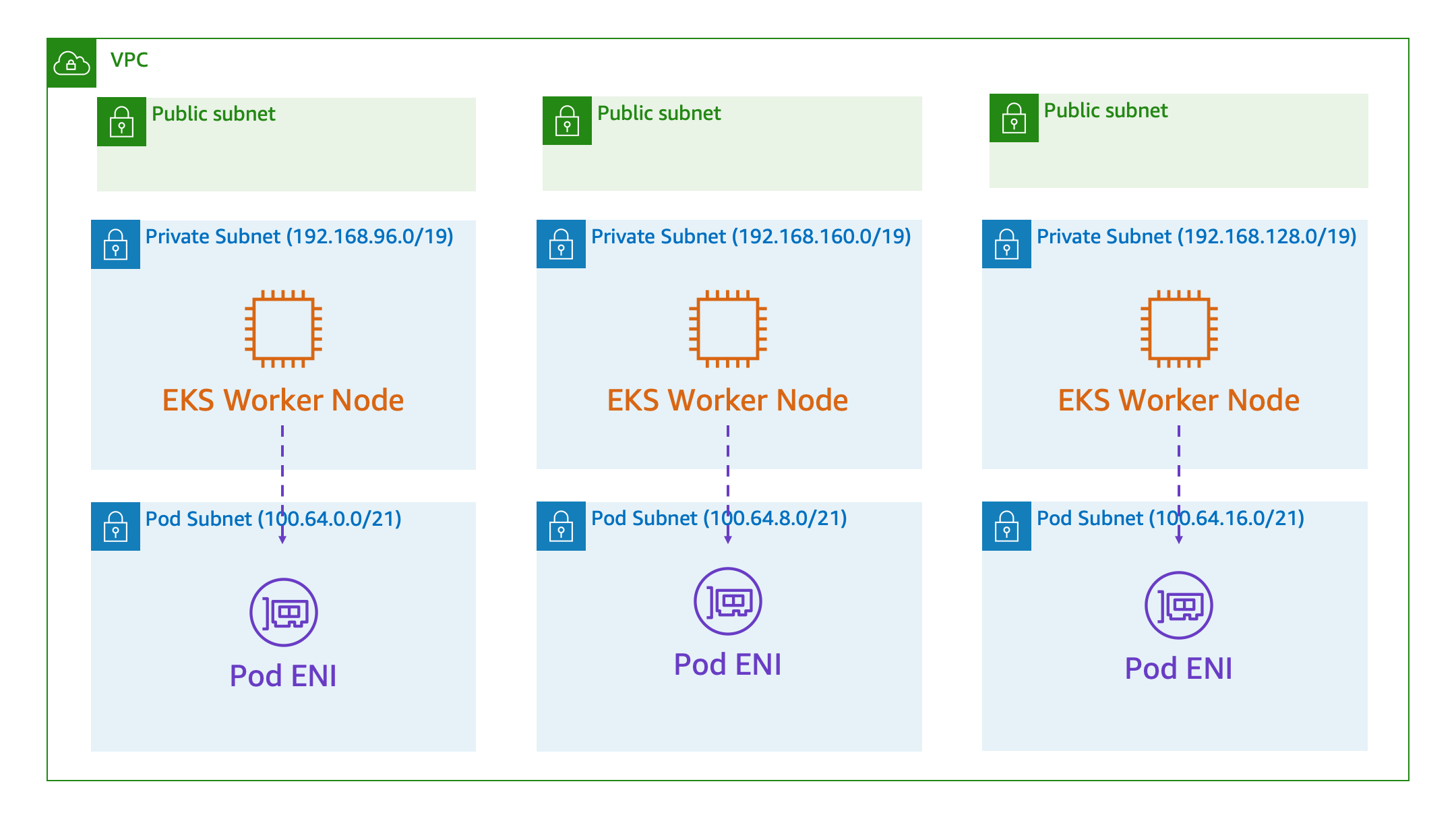

However, some organizations don’t have a /16 range to give to every Kubernetes cluster in their network. For these cases, Amazon EKS supports secondary CIDR ranges, another feature of Amazon VPC. A secondary CIDR range is an additional IPv4 CIDR block associated with the VPC. From it, we create an additional set of subnets in the same Availability Zones as the existing private subnets. The only difference is that these subnets are carved out of the secondary CIDR range rather than the VPC’s primary CIDR range.

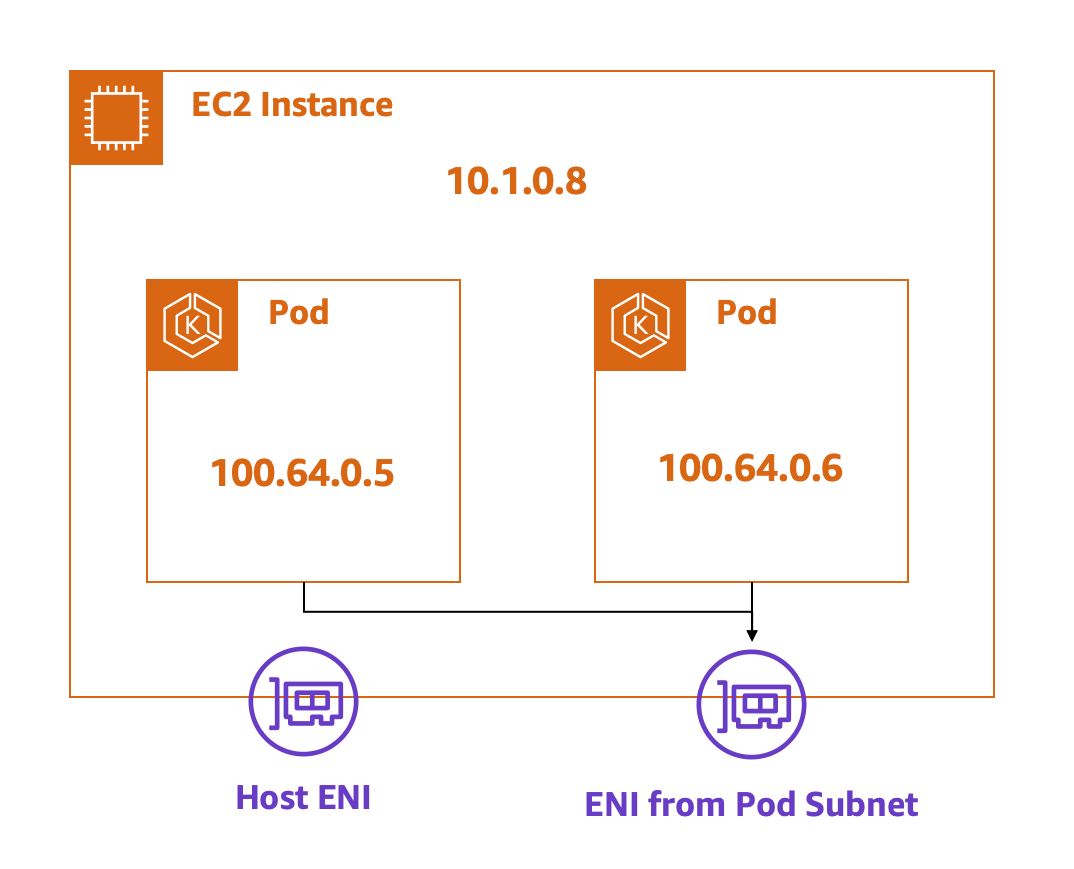

This works by attaching Elastic Network Interfaces (ENIs) to EC2 instances running in the primary subnets, while the ENIs themselves live in subnets from the secondary CIDR range. In this mode, the VPC CNI driver doesn’t assign pod IPs from the instance’s primary ENI, which stays in the node’s primary subnet. As a result, we need to lower the maximum number of pods allowed to run on the EC2 instance. The right value depends on the number of ENIs that can be attached to the instance and the number of private IPs that can be assigned to each ENI.

We can calculate the proper value using the following formula. I am using a t3.large. If you are using a different instance type, you can look up the values for this formula in the ENI docs.

```
# instance type: t3.large
# number of ENIs: 3
# number of private IPs per ENI: 12

maxPods = (number of ENIs - 1) * (IPs per ENI - 1) + 2
        = (3 - 1) * (12 - 1) + 2
        = 24
```
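Here is the same arithmetic as a small POSIX shell sketch. It makes it easy to plug in the limits for other instance types. The function names are hypothetical helpers, not part of any AWS tool. The comments give the usual reasoning behind each term, and the default (non-custom-networking) formula is included only for comparison.

```shell
# Hypothetical helpers: max pods per node for the VPC CNI.
#   $1 = maximum ENIs for the instance type
#   $2 = maximum private IPv4 addresses per ENI

# With custom networking:
#   ($1 - 1) -> the primary ENI stays in the node's subnet and hosts no pods
#   ($2 - 1) -> each ENI's own primary IP is not handed out to pods
#   + 2      -> host-network pods such as aws-node and kube-proxy
max_pods_custom_networking() {
  echo $(( ($1 - 1) * ($2 - 1) + 2 ))
}

# Default mode (no custom networking), shown only for comparison:
# pods can also use secondary IPs on the primary ENI.
max_pods_default() {
  echo $(( $1 * ($2 - 1) + 2 ))
}

echo "t3.large, custom networking: $(max_pods_custom_networking 3 12)"
echo "t3.large, default:           $(max_pods_default 3 12)"
```

For a t3.large, this prints 24 with custom networking and 35 in the default mode. To look up the two inputs for another instance type, `aws ec2 describe-instance-types --instance-types t3.large --query 'InstanceTypes[0].NetworkInfo.[MaximumNetworkInterfaces,Ipv4AddressesPerInterface]' --output text` should return them.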

Now that we have the max number of pods, we are ready to deploy our cluster. We will leverage eksctl to deploy a cluster without a node group. We will be adding a node group later.

```shell
eksctl create cluster --name secondary-cidr --without-nodegroup --region us-west-2
```

Once the cluster has been deployed, let’s store the VPC ID in an environment variable so we can reference it later. You can get this value from the console.

```shell
export EKSCTL_VPC_ID=vpc-0129683abbced1fa8
```

Now, let’s associate a secondary CIDR range with the VPC. eksctl creates the VPC with a 192.168.0.0/16 CIDR block, so we can associate 100.64.0.0/16 with the VPC without overlapping it. Run the following command to add this CIDR range to the VPC.

```shell
aws ec2 associate-vpc-cidr-block \
  --vpc-id "$EKSCTL_VPC_ID" \
  --cidr-block 100.64.0.0/16 \
  --region us-west-2
```
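Before associating a secondary block, it is worth confirming that it doesn't overlap the VPC’s primary block. Here is a minimal POSIX shell sketch that compares two IPv4 CIDR blocks. `ip_to_int`, `cidr_range`, and `cidrs_overlap` are hypothetical helpers, not AWS CLI features. The sketch checks only for overlap. It does not check the separate restrictions AWS places on which secondary blocks can be combined with a given primary block.

```shell
# Hypothetical helpers for comparing IPv4 CIDR blocks in POSIX sh.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split "a.b.c.d" into four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print the first and last address (as integers) of a block such as 192.168.0.0/16.
cidr_range() {
  addr=${1%/*}
  prefix=${1#*/}
  size=$(( 1 << (32 - prefix) ))
  start=$(( $(ip_to_int "$addr") & ~(size - 1) ))
  echo "$start $(( start + size - 1 ))"
}

# Succeed if the two blocks share at least one address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  # Now $1..$2 is the first block and $3..$4 is the second.
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

if cidrs_overlap 192.168.0.0/16 100.64.0.0/16; then
  echo "overlap: pick a different secondary block"
else
  echo "no overlap"
fi
```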

Now let’s create the subnets that we will use to allocate IPs for our pods. We will leverage the secondary CIDR range we just attached and use /21 blocks for each of our subnets. This will give us room for about 2000 pods per subnet. When you run these commands, keep track of the Subnet ID and Availability Zone.

```shell
aws ec2 create-subnet \
  --vpc-id "$EKSCTL_VPC_ID" \
  --availability-zone us-west-2a \
  --cidr-block 100.64.0.0/21 \
  --region us-west-2

aws ec2 create-subnet \
  --vpc-id "$EKSCTL_VPC_ID" \
  --availability-zone us-west-2b \
  --cidr-block 100.64.8.0/21 \
  --region us-west-2

aws ec2 create-subnet \
  --vpc-id "$EKSCTL_VPC_ID" \
  --availability-zone us-west-2c \
  --cidr-block 100.64.16.0/21 \
  --region us-west-2
```
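You can also derive the `/21` layout instead of typing it by hand. Here is a minimal POSIX shell sketch that assumes the secondary block is a /16 starting on an octet boundary, as 100.64.0.0/16 does. `nth_slash21` and `usable_ips` are hypothetical helpers. The sketch also shows where the "about 2000 pods" figure comes from: a /21 holds 2,048 addresses, and AWS reserves five in every subnet.

```shell
# Hypothetical helpers for laying out /21 pod subnets inside a /16.
# Assumes the base block is a /16 on an octet boundary, e.g. 100.64.0.0/16.

# Print the Nth (0-based) /21 inside the /16. A /21 spans 2,048 addresses,
# which is 8 consecutive values of the third octet.
nth_slash21() {
  base=${1%.*.*}   # keep the first two octets, e.g. "100.64"
  echo "$base.$(( $2 * 8 )).0/21"
}

# AWS reserves 5 addresses in every subnet (the first four and the last one).
usable_ips() {
  echo $(( (1 << (32 - $1)) - 5 ))
}

i=0
for az in us-west-2a us-west-2b us-west-2c; do
  echo "$az $(nth_slash21 100.64.0.0/16 "$i")"
  i=$(( i + 1 ))
done
echo "usable IPs per /21: $(usable_ips 21)"
```

It prints the same three blocks used in the `create-subnet` commands, followed by 2043 usable addresses per subnet.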

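Next, we need to tell the VPC CNI driver to use custom networking, which is the name for this configuration.

```shell
kubectl set env daemonset aws-node -n kube-system AWS_VPC_K8S_CNI_CUSTOM_NETWORK_CFG=true
kubectl set env daemonset aws-node -n kube-system ENI_CONFIG_LABEL_DEF=failure-domain.beta.kubernetes.io/zone
```

The second variable tells the CNI which node label to read when it looks up an ENIConfig for a node. As a result, each node picks up the configuration named after its Availability Zone. On newer Kubernetes versions, `failure-domain.beta.kubernetes.io/zone` is deprecated in favor of `topology.kubernetes.io/zone`.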

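To use custom networking, the VPC CNI reads its subnet configuration from `ENIConfig` resources, which are defined by a Custom Resource Definition (CRD). Let’s add this CRD to our cluster. Recent VPC CNI releases typically ship this CRD already; you can check with `kubectl get crd eniconfigs.crd.k8s.amazonaws.com`.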

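```yaml
# file: ENIConfig.yml
# apiextensions.k8s.io/v1beta1 was removed in Kubernetes 1.22; v1 requires a schema.
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: eniconfigs.crd.k8s.amazonaws.com
spec:
  scope: Cluster
  group: crd.k8s.amazonaws.com
  names:
    plural: eniconfigs
    singular: eniconfig
    kind: ENIConfig
  versions:
    - name: v1alpha1
      served: true
      storage: true
      schema:
        openAPIV3Schema:
          type: object
          x-kubernetes-preserve-unknown-fields: true
```

```shell
kubectl apply -f ENIConfig.yml
```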

We are almost there! We have deployed an EKS cluster without any nodes, added secondary subnets to our VPC to address our pods, and deployed the CRD needed to configure the VPC CNI driver. We are now ready to configure the CNI driver.

To get started, we need to deploy a node group. Notice that we launch t3.large instances and pass the max pods value we calculated earlier in the post. Without `--node-type`, eksctl would fall back to its default instance type, which has different ENI limits.

```shell
eksctl create nodegroup \
  --cluster secondary-cidr \
  --region us-west-2 \
  --name ng-1 \
  --node-type t3.large \
  --nodes 3 \
  --max-pods-per-node 24 \
  --node-private-networking
```

Once the node group has been deployed, we need the ID of the security group attached to the node group instances. Replace the security group ID below with the value from your own configuration. Then update the subnet values with the IDs from the `create-subnet` commands we ran earlier in this guide, making sure each subnet ID matches the Availability Zone it was created in. Your configuration should look similar to the one below.

Do not change the names of these ENIConfigs. Each name must match an Availability Zone, because the CNI uses the node’s zone label to automatically wire up each new instance with the ENIConfig of the same name.

```yaml
# file: us-west-2-eniconfigs.yml

# us-west-2a
apiVersion: crd.k8s.amazonaws.com/v1alpha1
kind: ENIConfig
metadata:
  name: us-west-2a
spec:
  subnet: subnet-04fb419e1235005d1
  securityGroups:
    - sg-0dd6ec91e081aa4c7
---
# us-west-2b
apiVersion: crd.k8s.amazonaws.com/v1alpha1
kind: ENIConfig
metadata:
  name: us-west-2b
spec:
  subnet: subnet-05d89d2dd1e81c713
  securityGroups:
    - sg-0dd6ec91e081aa4c7
---
# us-west-2c
apiVersion: crd.k8s.amazonaws.com/v1alpha1
kind: ENIConfig
metadata:
  name: us-west-2c
spec:
  subnet: subnet-0819e3360cc95fab5
  securityGroups:
    - sg-0dd6ec91e081aa4c7
```

```shell
kubectl apply -f us-west-2-eniconfigs.yml
```
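With more Availability Zones, hand-editing one ENIConfig per zone becomes error-prone. Here is a minimal POSIX shell sketch that emits the same manifest from `zone=subnet-id` pairs. `generate_eniconfigs` is a hypothetical helper, and the security group and subnet IDs are the example values from above, so substitute your own. The sketch keeps each ENIConfig’s name equal to its zone, as the CNI expects when `ENI_CONFIG_LABEL_DEF` points at the zone label.

```shell
# Hypothetical helper: emit one ENIConfig document per "zone=subnet-id" pair.
#   $1    = security group ID shared by all ENIConfigs
#   $2... = zone=subnet-id pairs
# metadata.name is set to the zone so it matches the node's zone label.
generate_eniconfigs() {
  sg=$1
  shift
  first=1
  for pair in "$@"; do
    zone=${pair%%=*}     # text before "="
    subnet=${pair#*=}    # text after "="
    if [ "$first" -eq 0 ]; then
      echo "---"         # YAML document separator between ENIConfigs
    fi
    first=0
    cat <<EOF
apiVersion: crd.k8s.amazonaws.com/v1alpha1
kind: ENIConfig
metadata:
  name: $zone
spec:
  subnet: $subnet
  securityGroups:
    - $sg
EOF
  done
}

generate_eniconfigs sg-0dd6ec91e081aa4c7 \
  us-west-2a=subnet-04fb419e1235005d1 \
  us-west-2b=subnet-05d89d2dd1e81c713 \
  us-west-2c=subnet-0819e3360cc95fab5
```

Redirect the output to a file, for example `us-west-2-eniconfigs.yml`, and run the same `kubectl apply` command. This should give an equivalent result to the hand-written manifest.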

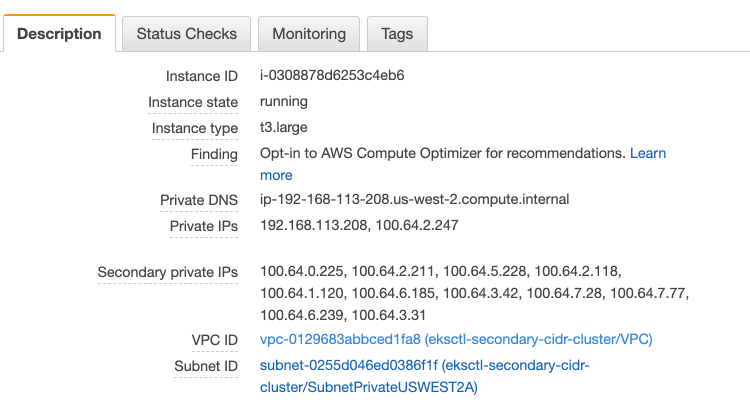

Now, terminate all of the instances in the node group so that fresh instances are launched with the new configuration. When the new instances come up, you should see the following IP address allocation in the console.

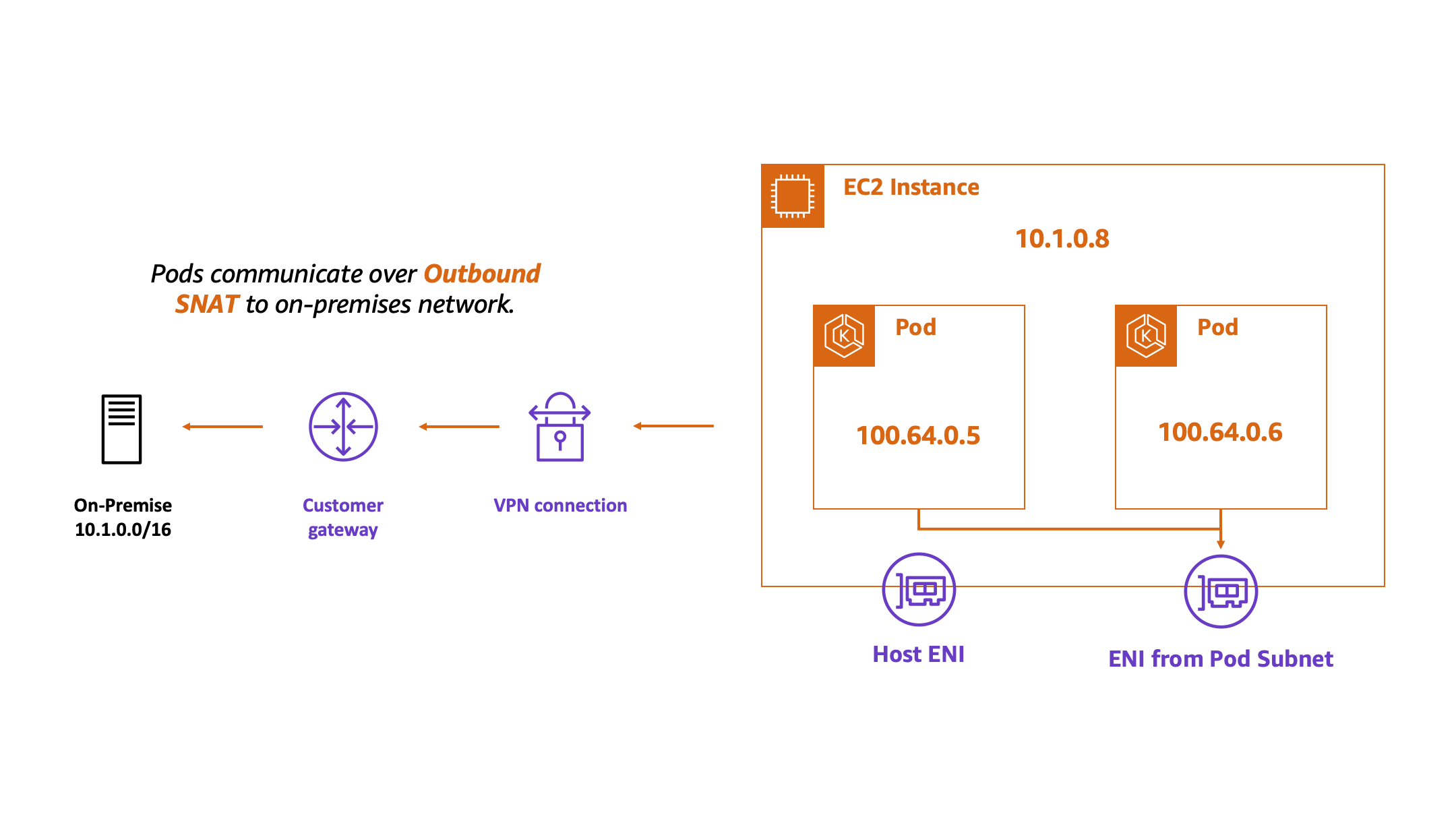
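To confirm the same result from inside the cluster, you can compare each pod’s IP against the secondary range. Here is a minimal sketch that assumes the 100.64.0.0/16 block from this walkthrough, so a prefix match is enough. It expects input in the two-column form produced by `kubectl get pods -A -o custom-columns=IP:.status.podIP,HOSTNET:.spec.hostNetwork --no-headers`. `check_pod_ips` is a hypothetical helper. Pods that use host networking, such as `aws-node` and `kube-proxy`, keep the node’s primary-range IP, so the sketch skips them.

```shell
# Hypothetical helper: read "podIP hostNetwork" pairs on stdin and report
# pods outside the secondary range. Assumes the 100.64.0.0/16 block used in
# this walkthrough, so a simple prefix match stands in for a CIDR comparison.
check_pod_ips() {
  status=0
  while read -r ip hostnet; do
    # Skip blank lines, pods without an IP yet, and host-network pods.
    [ -z "$ip" ] && continue
    [ "$ip" = "<none>" ] && continue
    [ "$hostnet" = "true" ] && continue
    case $ip in
      100.64.*) ;;
      *) echo "outside secondary CIDR: $ip"; status=1 ;;
    esac
  done
  return "$status"
}

# Illustrative input only; real data would come from the kubectl command above.
printf '100.64.3.7 <none>\n192.168.40.12 true\n' | check_pod_ips && echo "all pod IPs in secondary CIDR"
```

Piping the kubectl output into `check_pod_ips` prints any pod whose IP falls outside the range and returns a non-zero status if it finds one.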

That’s it! Now our instances are addressed out of the primary CIDR range and our pods are addressed out of the secondary CIDR range. This keeps your pods from consuming valuable corporate IP space while still allowing them to communicate with the corporate network. The CNI driver handles this by source-NATing outbound pod traffic that leaves the VPC to the node’s primary IP address, which comes from the routable primary CIDR range.

If you have any questions about this configuration, don’t hesitate to reach out!